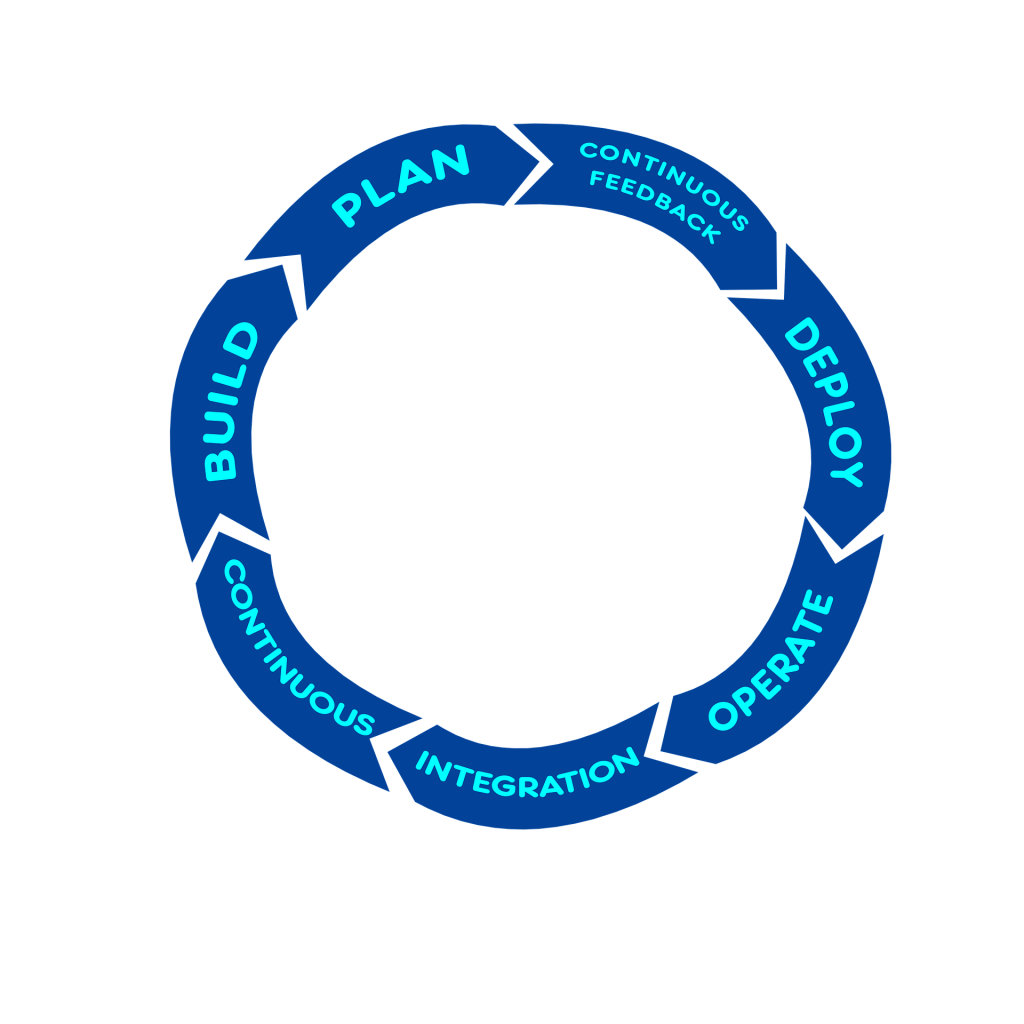

For two and a half years I was the technical lead responsible for the operational management of a global platform running a vendor provided software product for a Fortune 100 insurance company. That’s a mouthful. In simpler terms, we did DevOps for a vendor provided enterprise content management system. The team consisted of between 8-10 people, including 3-5 in Northern Ireland. This included a scrum master and product owner, so generally we had around 8 developers at any given time. The following is a summary of the DevOps lessons learned.

The application was a pretty standard 3 tier web application, although it was a bit slow to deploy and come online. We used standard AWS CloudFormation templates and shell scripts to automate the deployments to AWS. The configuration was highly available. Initially creating 2 load balancers, 2 application servers, 2 search servers, and a multi-AZ RDS database.

When I first joined the team, there were 2 separate code bases and a third in development. Job one was two merge these 3 lines into a single code base for maintainability and reduction of effort. It was tedious and time consuming to make bug fixes in three separate place and test each.

Lesson 1: Keep a single code base if at all possible. Use configuration to handle different versions, options, and other differences between deployments for things like dev, test, and prod environments.

The next challenge we ran into was that there was only a single shared development environment. You can imagine, given the number of developers that waiting for infrastructure to deploy caused some backups and delays. The application itself was slow to deploy and took up to 30 minutes to come online. That means a single change to the code that needed to be tested could take a developer an hour to test manually. We quickly moved to a one environment per developer model. This has pros and cons. The biggest pro being productivity, the biggest con, being cost, which I will discuss more later.

Lesson 2: Each developer needs to be able to deploy and test their changes without waiting for others. Ideally individual development environments are needed.

The next hurdle was automated testing. There were no automated tests in place so each change required manual testing, usually with varying degrees of diligence. We put a system of nightly tests into place to build and deploy the code. This would test both a clean new deployment as well as a redeploy. The automated test suite would test basic application functionality through Postman, an HTTP API testing tool, as well as other lower level tests to insure that the infrastructure was correct. Ideally, we would have had true CI/CD where each code commit kicked off a test suite. This was difficult due to the length of time testing took. A brand new deployment took more than an hour to create all of the AWS resources and deploy the application. A redeployment was shorter, but took at least 30 minutes. Usually automated build tests should be short and provide immediate feedback. Nightly testing can be longer and more thorough.

Lesson 3: Automated testing is essential for reliability. It takes away human error and allows for easily spotting regressions from newly checked in code. This helps prevent bugs being deployed to production.

After we moved to individual developer environments, we quickly realized that cost could easily spiral out of control. As I mentioned, a single deployment consisted of no less than 6 servers, including RDS. We slimmed this down to a single AZ RDS instance, only deployed search servers if we needed to, and optionally could deploy a single application server. That left us with no more than 5, sometimes 3, and occasionally 2 servers for each developer. Even with small instances this was thousands of dollars per month. Arguably, the cost is worth it compared to a developer sitting idle. Regardless, we looked for ways to save money.

We started by using auto scaling scheduled actions to turn off development EC2 instances outside of business hours (we left them on for 9 hours each day, with the times configurable). As a well-architected system, our servers were in an auto scaling group to handle additional load. This easily reduced our cost by about 73%. This only worked for EC2 instances in auto scaling groups, not RDS. To stop and start RDS, we used the AWS Instance Scheduler, which uses AWS Lambda and Amazon DynamoDB. Using the instance scheduler, we could also schedule RDS to turn off and on with a schedule. We did have to turn these on before the application servers came online since it takes a while for an RDS instance to become available. Overall, this resulted in considerable savings on our AWS bill. We were not able to do it at the time, because we used RHEL for our application servers, but spot instances are another great way to save even more. We also were in the process of switch from RHEL to Ubuntu. RHEL instances are more expensive due to support costs from Red Hat.

Lesson 4: Build in cost savings from the beginning. Everything we did for our development environments was instantly applicable to all higher level environments like dev, test, perf, and production. Obviously, you wouldn’t want to limit the running time of production, but often dev, test, and perf only need to run during business hours.

I’ve purposefully left out some very basic principles around security and development best practices. Here are a few in no particular order:

Lesson 5: Use version control and short lived branches, for example GitHub Flow.

Lesson 6: Your systems should use encryption in transit and encryption at rest ALWAYS.

Lesson 7: Everything should be automated, from deployments to upgrades, testing, and anything else you can think of.

Lesson 8: Limit access to servers. We had minimal access to production servers, basically the ability to troubleshoot only. To get a down system running we could make changes directly, but given the auto scaling nature of our servers, every change also needed to be committed in code before it was truly fixed. Immutable servers are gaining traction with no ability to even SSH into instances.